Planning and conducting user testing to identify aspects of the user experience that could be optimised.

I ran every part of the study: creating a test plan and script, conducting the user testing sessions, analysing the results and presenting insights back to stakeholders.

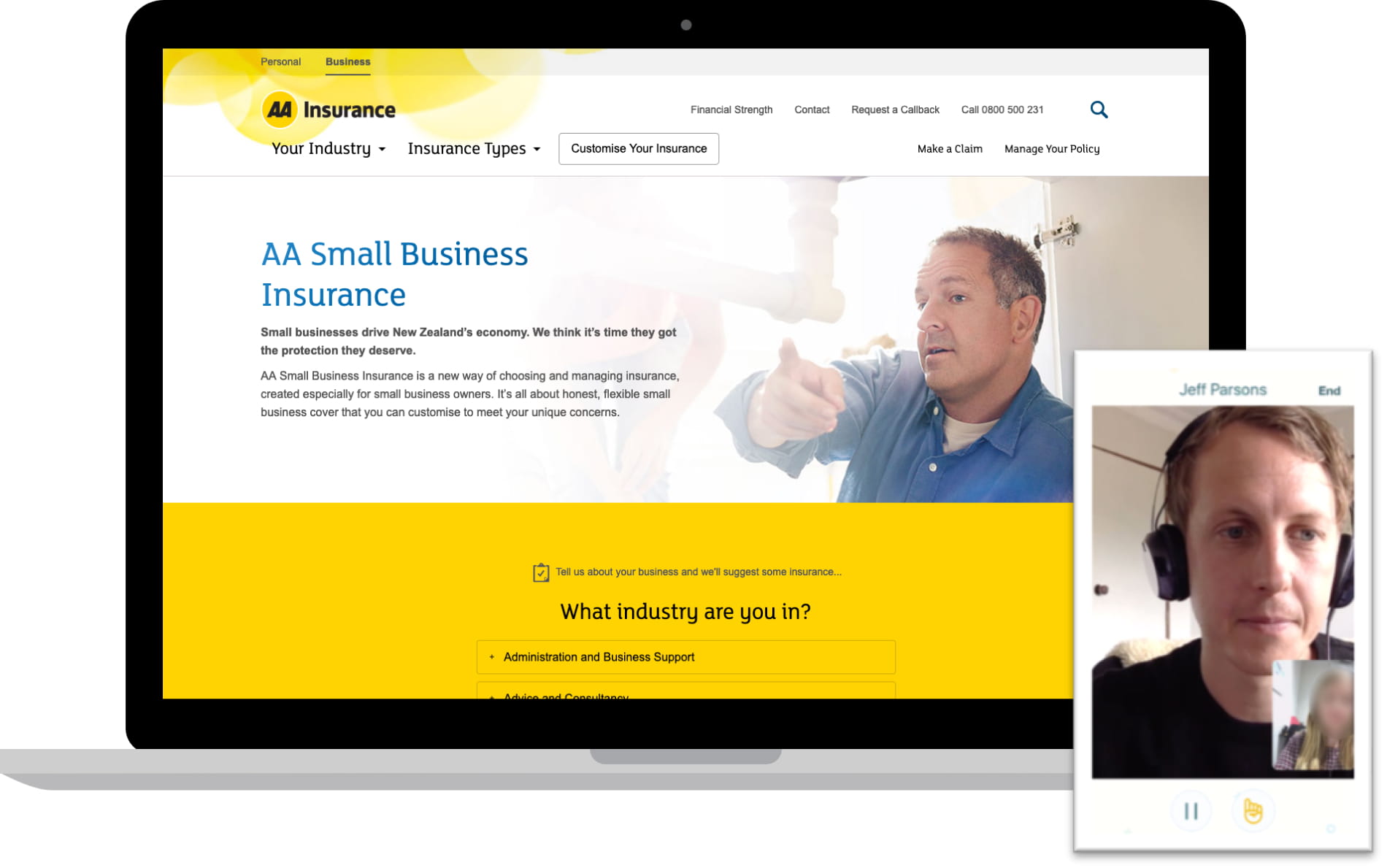

A new area of the AA Insurance website was created, offering insurance to small businesses with the ability to get a quote and buy it online. After the website was launched, I conducted user testing to uncover and understand potential usability issues.

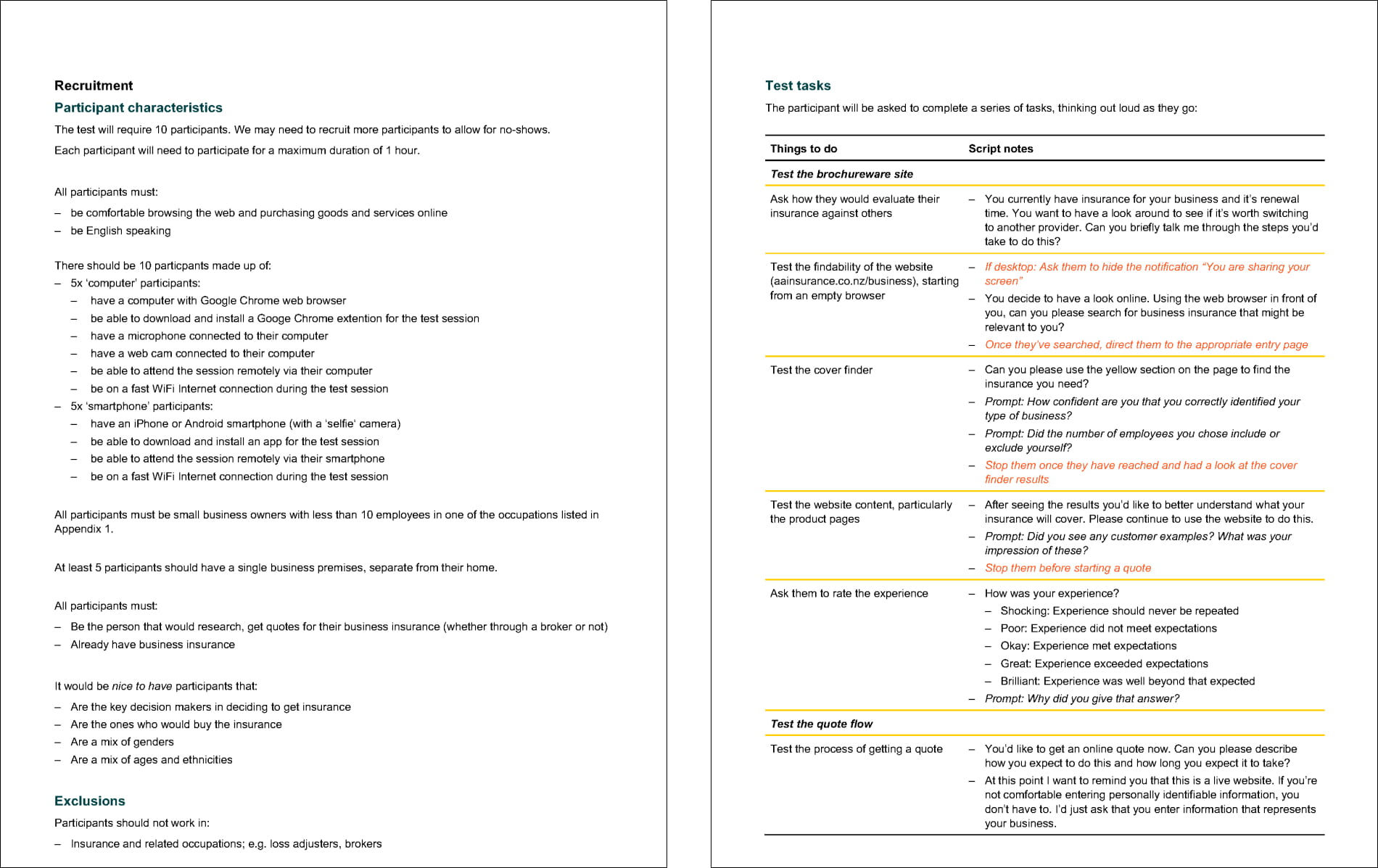

I wrote a comprehensive test plan detailing the objectives and methodology of the study, as well as a detailed script with predefined tasks and questions. Having a robust plan ensured the user testing sessions were consistent and covered the key tasks to be assessed. A recruitment agency was used to source participants whose characteristics resembled those of target users.

I worked with a UX Researcher to analyse existing research studies, Voice of the Customer survey data and analytics data, and to conduct user interviews. We learned about the wider experience of advisers outside their interactions with our brand.

With this insight, we then created design principles to help us focus on designing a product experience that would suit the greater context—complementing the different ways advisers run their businesses, supporting them in providing excellent service to their clients, and beating our competition on experience.

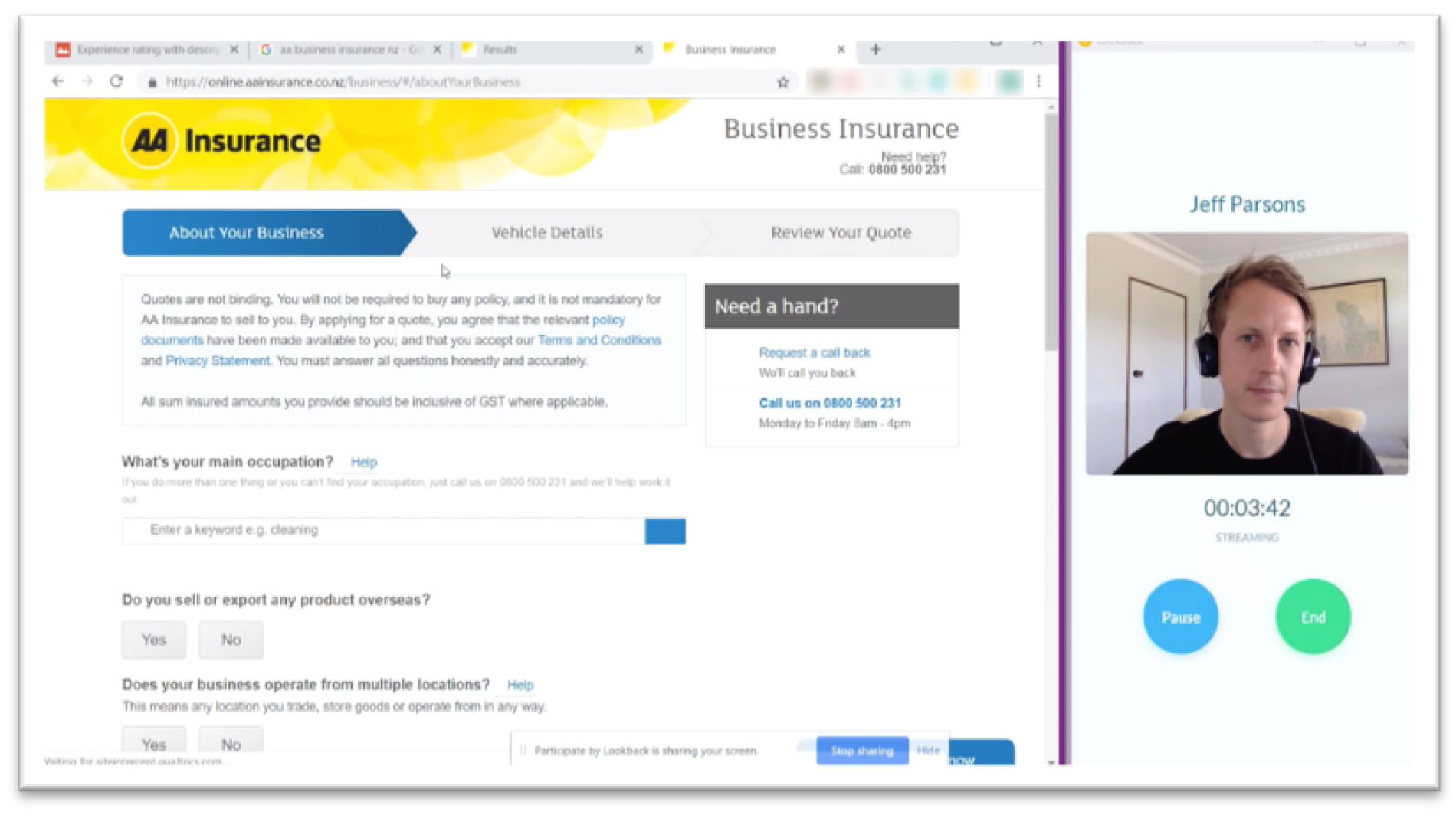

The testing was conducted remotely with Lookback—an online tool that allowed me to talk to and observe participants using their own computers and smartphones. Remote testing in some ways resembles field studies, in that you can observe participants in their own environment.

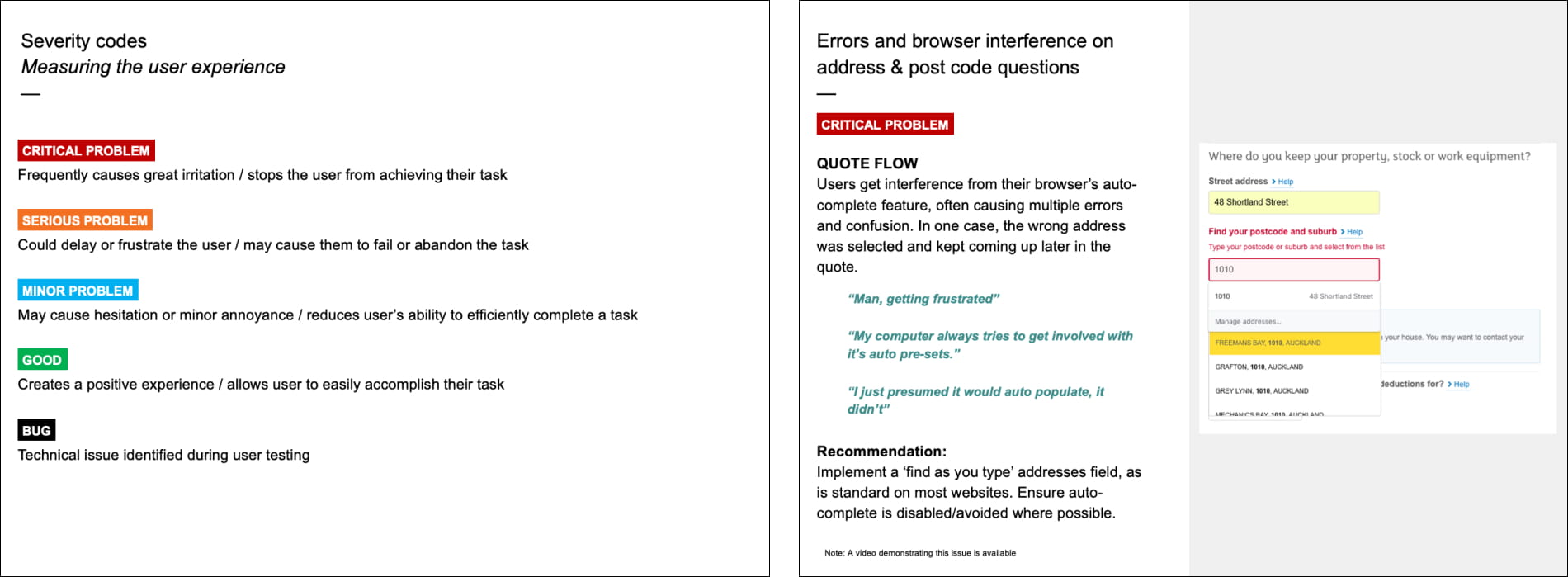

I asked participants to complete three tasks, which resembled a simplified user flow of browsing available products, answering questions to get a quote, and reviewing that quote. This allowed me to assess different parts of the site and identify elements that caused hesitation, errors, confusion, and fatigue.

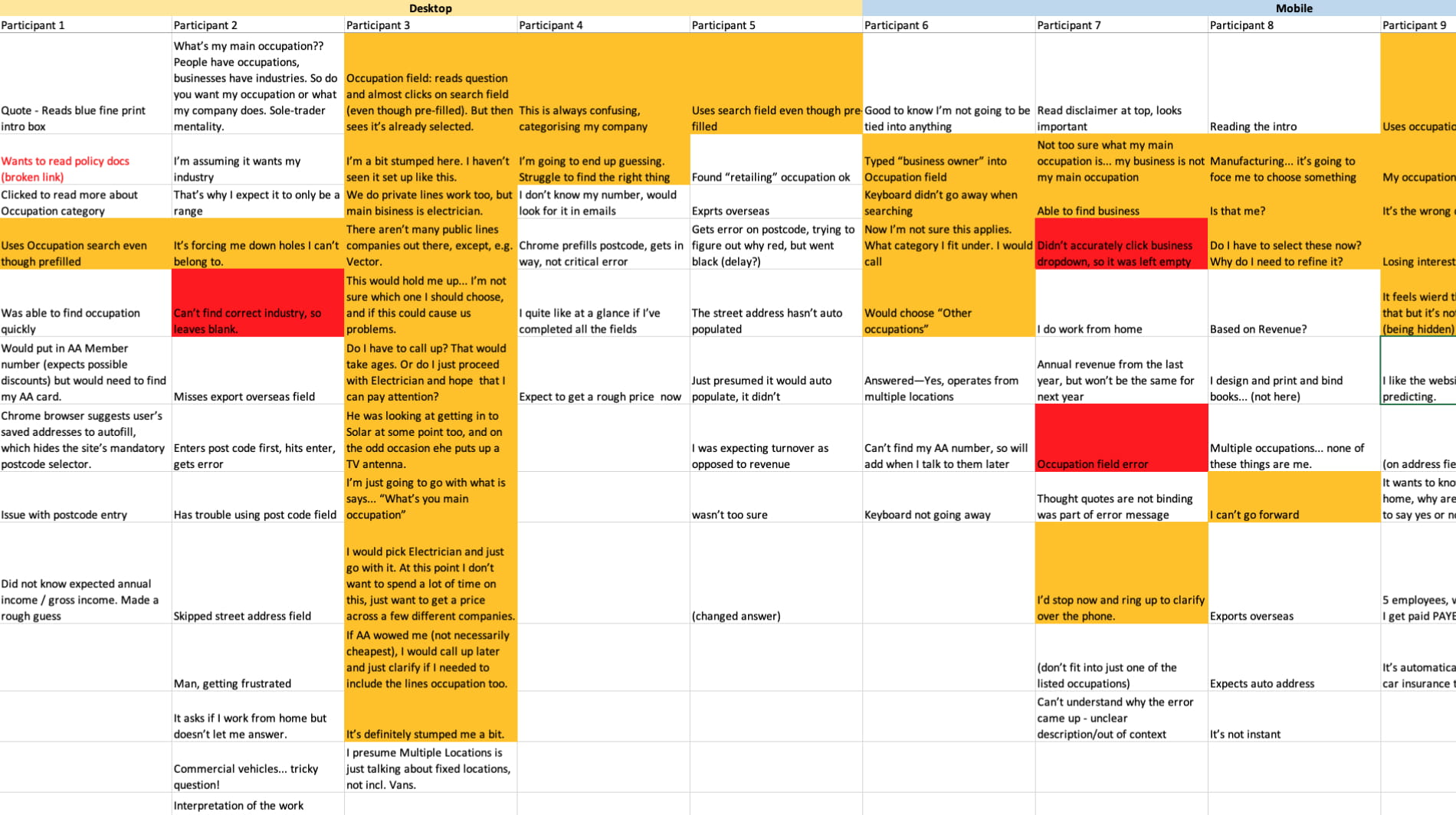

Recurring issues were already emerging during the sessions, but once the user testing sessions had concluded, I compiled all my notes in a spreadsheet to look for patterns and additional themes.

One finding was that while participants were forgiving of the first few minor issues they encountered, the issues had a cumulative effect, building on top of each other and contributing to users losing their patience and confidence.

The learning was that these minor issues should not be ignored, especially when viewed together as part of a single experience.

This was my first experience with remote user testing, so it was slightly experimental in that sense. I was encouraged that it turned out to be a viable alternative to in-person testing: the technology worked well enough, and it enabled the inclusion of participants who would otherwise be excluded due to location and availability during the day.

However, the controlled environment you get with in-person user testing reduces the technical hiccups that remote user testing is more susceptible to.